A Differentiable Recurrent Surface for Asynchronous Event-Based Data

Summary: We propose a mechanism to efficiently apply a Long Short-Term Memory (LSTM) network as a convolutional filter over the 2D stream of events produced by event-based cameras in order to accumulate pixel information through time and build 2D event representations. The reconstruction mechanism is end-to-end differentiable, meaning that it can be jointly trained with state-of-the-art frame-based architectures to learn event-surfaces specifically tailored for the task at hand.

Abstract: Dynamic Vision Sensors (DVSs) asynchronously stream events at pixels that undergo brightness changes. Unlike classic vision devices, they produce a sparse representation of the scene. Therefore, to apply standard computer vision algorithms, events need to be integrated into a frame or event-surface. This is usually achieved through hand-crafted grids that reconstruct the frame using ad-hoc heuristics. In this paper, we propose Matrix-LSTM, a grid of Long Short-Term Memory (LSTM) cells that efficiently process events and learn end-to-end task-dependent event-surfaces. Compared to existing reconstruction approaches, our learned event-surface shows good flexibility and expressiveness on optical flow estimation on the MVSEC benchmark, and it improves the state of the art in event-based object classification on the N-Cars dataset.

CUDA Kernels

Inspired by the convolution operation defined on images, we designed the Matrix-LSTM layer to enjoy translation invariance by performing a local mapping of events into features. This is implemented by sharing the parameters across all the LSTM cells in the matrix, as in a convolutional kernel. The convolution-like operation is implemented efficiently by means of two carefully designed event grouping operations, namely groupByPixel and groupByTime. Thanks to these operations, rather than replicating the LSTM unit at every spatial location, a single recurrent unit is applied over the event sequences of all pixels in parallel.
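To make the grouping idea concrete, here is a minimal NumPy sketch of how events might be packed into per-pixel sequences so that one shared recurrent unit can process them as a single batch. It is an illustration under stated assumptions, not the CUDA kernels of this project: the function names `group_by_pixel` and `scatter_to_surface`, the event layout (separate `xs`, `ys`, `ts` arrays plus a feature matrix), and the zero-padding scheme are all hypothetical. The recurrent unit itself is left out, and the sketch does not attempt to reproduce groupByTime.

```python
import numpy as np


def group_by_pixel(xs, ys, ts, feats, width):
    """Pack events into zero-padded per-pixel sequences (hypothetical helper).

    Returns:
      padded: (P, T_max, F) array, one time-ordered row per active pixel
      counts: (P,) number of real events in each row
      active: (P,) flat pixel index (y * width + x) of each row
    """
    pix = ys * width + xs
    # Sort by pixel first, then by timestamp (lexsort's last key is primary).
    order = np.lexsort((ts, pix))
    pix_sorted = pix[order]
    active, starts, counts = np.unique(
        pix_sorted, return_index=True, return_counts=True
    )
    # Position of each event inside its own pixel sequence.
    pos = np.arange(len(pix_sorted)) - np.repeat(starts, counts)
    row = np.repeat(np.arange(len(active)), counts)
    padded = np.zeros(
        (len(active), counts.max(), feats.shape[1]), dtype=feats.dtype
    )
    padded[row, pos] = feats[order]
    return padded, counts, active


def scatter_to_surface(per_pixel_out, active, height, width):
    """Place one output vector per active pixel back on the H x W grid.

    Pixels that received no events are left at zero (an assumption).
    """
    surface = np.zeros(
        (height * width, per_pixel_out.shape[1]), dtype=per_pixel_out.dtype
    )
    surface[active] = per_pixel_out
    return surface.reshape(height, width, -1)
```

In this sketch, `padded` has shape `(P, T_max, F)`, where `P` is the number of pixels that received at least one event. A single LSTM with shared weights could treat `P` as the batch dimension, and `counts` indicates which time step of each row holds the last real event. The output at that step would then be passed to `scatter_to_surface` to form a dense 2D representation.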

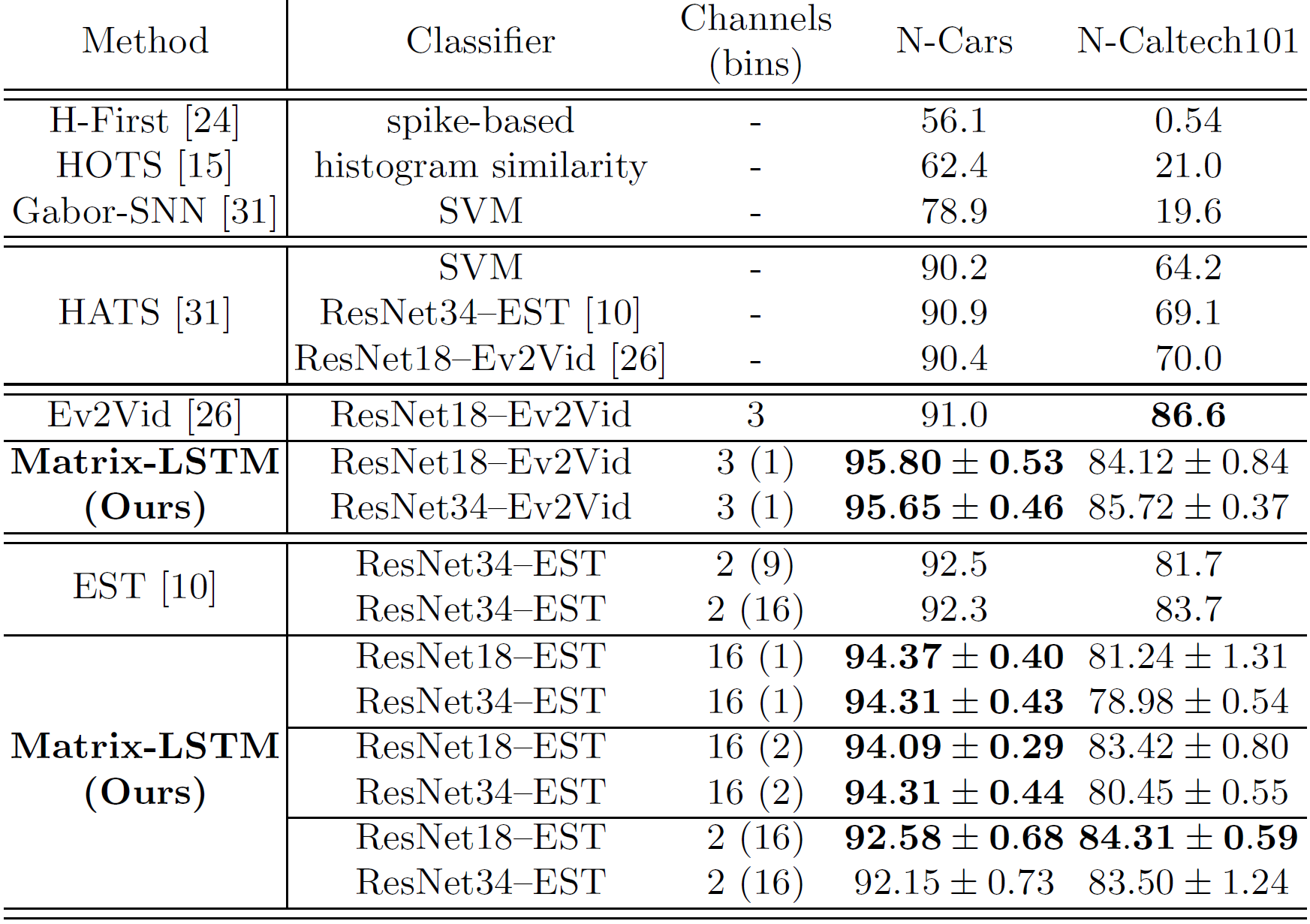

Classification

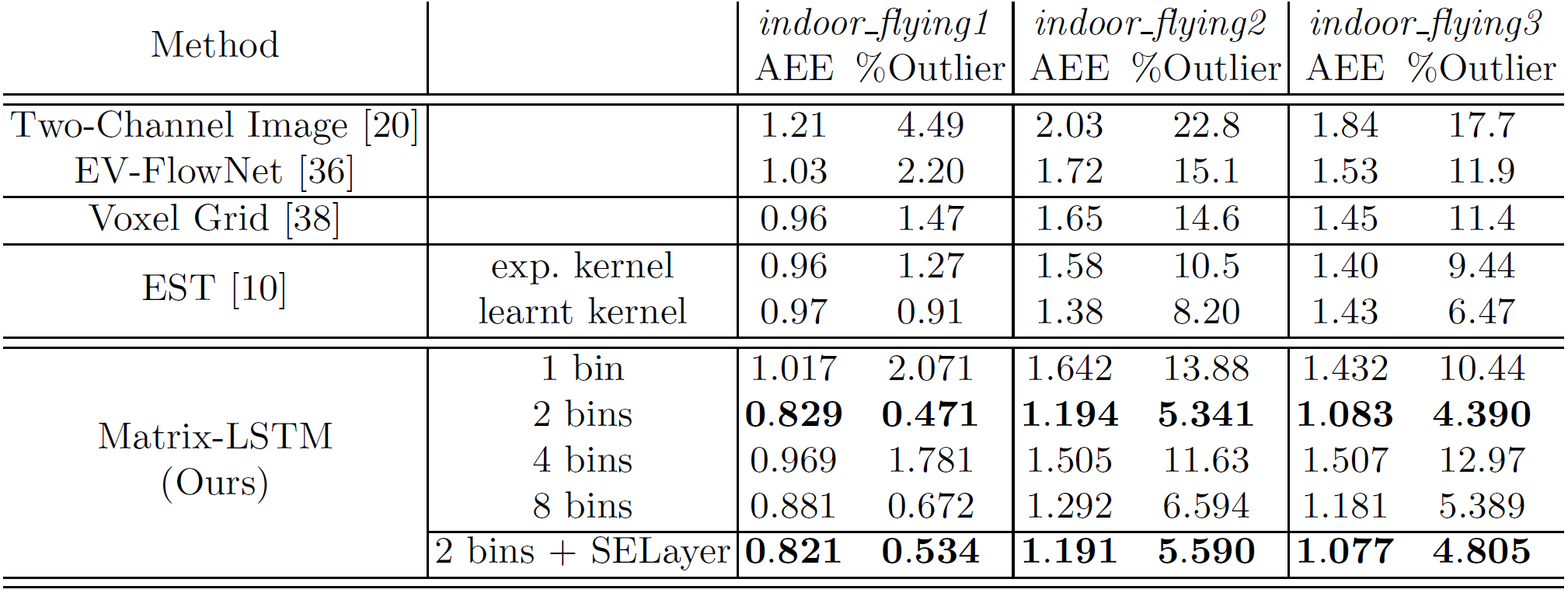

Optical Flow Prediction

MVSEC indoor_flying sequences with dt=1

MVSEC indoor_flying sequences with dt=4

Source Code

[GitHub]

[ArXiv]


Citation

@InProceedings{Cannici_2020_ECCV,
  author = {Cannici, Marco and Ciccone, Marco and
            Romanoni, Andrea and Matteucci, Matteo},
  title = {A Differentiable Recurrent Surface
           for Asynchronous Event-Based Data},
  booktitle = {European Conference on
               Computer Vision (ECCV)},
  month = {August},
  year = {2020}
}